In the last few years, there has been significant growth in the domains of Augmented Reality (AR) and Virtual Reality (VR), driven mostly by the rapid advancement and proliferation of the associated hardware and by increasing public interest in the sector. While this rapid advancement is clearly evident in the underlying technology, such as motion sensors, stereoscopic displays and integrated surround-sound speakers, the interaction methods themselves overlap heavily with the more traditional HCI methods found in conventional, non-augmented systems. This is expected and even encouraged: both software developers and end users are already familiar with these established HCI methods (e.g. using an on-screen cursor to select elements), which can act as a de facto bedrock for both parties to build upon. However, there is another side to the proverbial coin. These established HCI methods are often the product of hardware or computational limitations that no longer apply, and they cater to a fundamentally different kind of computational system than the extended reality (XR) systems to which they are eventually applied. As a result, they require specific workarounds to remain viable in XR, and these workarounds may impair the usability of the system or the productivity of its users for the sake of prior familiarity. The main aim of this paper is to comprehensively and systematically catalogue traditional HCI input methods and to compare them with more bespoke methods developed specifically for XR environments, in order to provide a foundation for more usable, intuitive and practical environments for XR users.

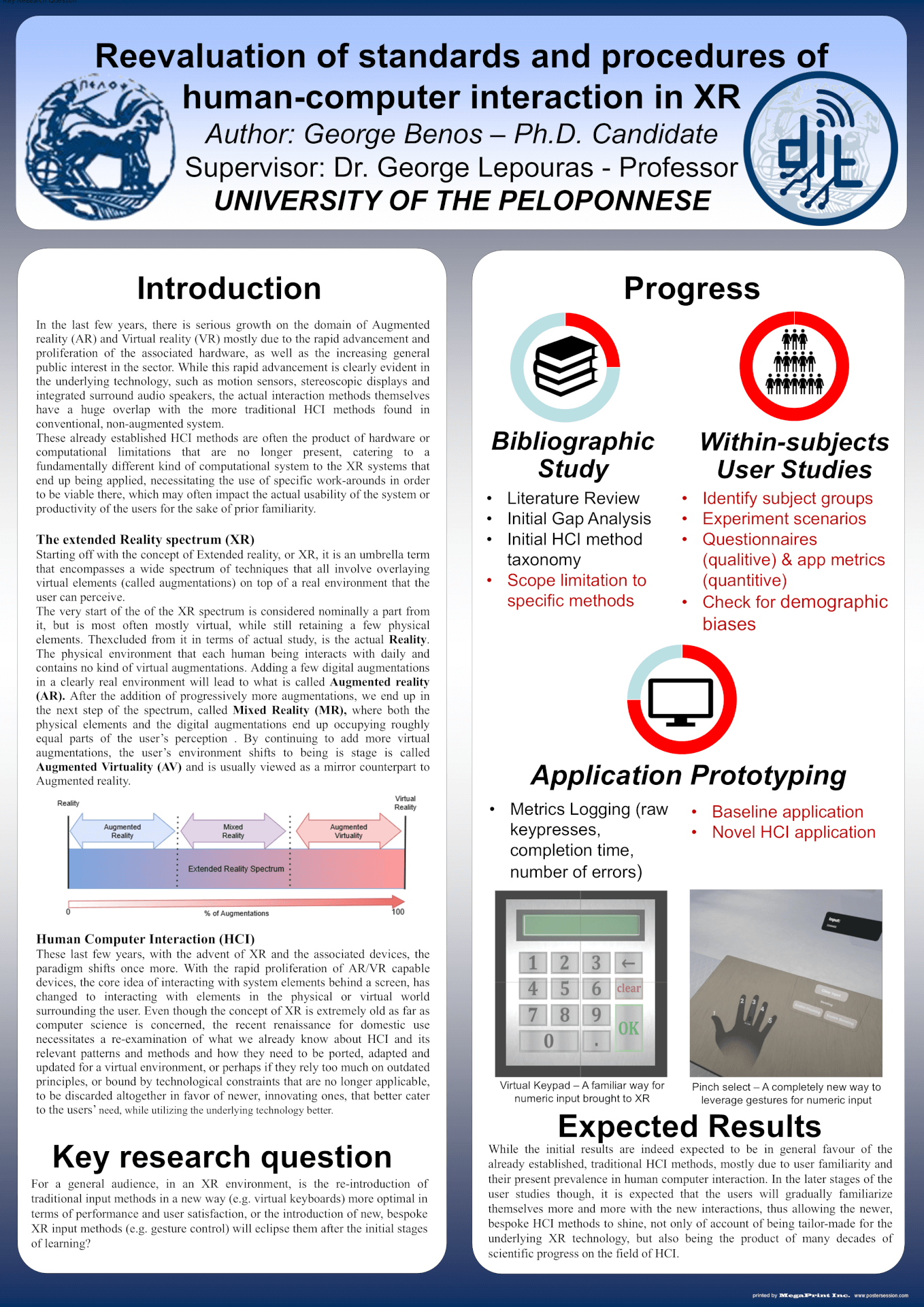

Reevaluation of standards and procedures of human-computer interaction in XR