The Experiential Journey: Fostering Fun, Exploration, and Critical Thought in Human-AI Co-Creation

Authors: Eleana Pandia, Panagiotis Livadas, Elektra Vouza.

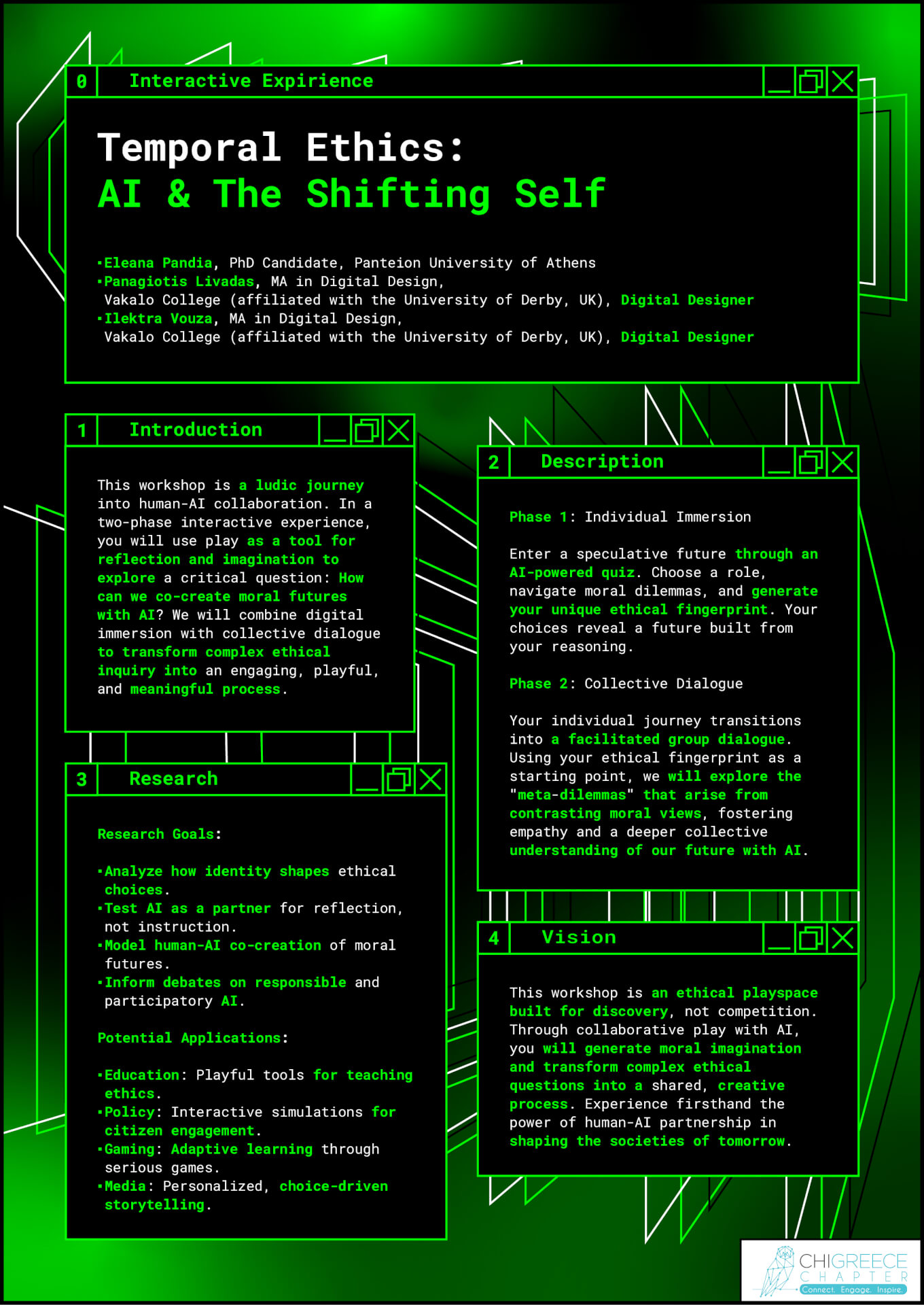

Imagine stepping into a world just beyond the horizon of the present: a post-anthropocentric society where questions of identity, agency, and responsibility are unsettled, and where your traveling companion is not another human, but an artificial general intelligence (AGI). This is the entry point to The Experiential Journey, a workshop that invites participants into a narrative of ethical exploration and playful co-creation. Here, winning or losing is irrelevant; what matters is what you discover about yourself, about others, and about the futures you might imagine together.

The journey begins with a simple yet disarming choice: do you walk forward as The Kid, full of untapped potential; as The Present Self, grounded in the here and now; or as The Old Man, seasoned by hindsight? This temporal self-simulation (Parrott et al., 2019; Hershfield et al., 2011) alters how the AGI speaks to you, reshaping dilemmas through the eyes of innocence, urgency, or wisdom. At each fork in the path, the AGI poses new challenges: freshly conjured scenarios spun in real time through procedural content generation (Guzdial & Riedl, 2019). These dilemmas draw you across three axes: into the depths of Self, the complexities of World, and the trajectories of Evolution (Floridi, 2019).

Your choices are of the utmost importance. The AGI weaves them into twists in the unfolding story that mirror your stance. With each step, you accumulate an “ethical fingerprint,” a profile that quantifies not only how fair, sustainable, or hopeful your responses may be, but also what kind of world your decisions are building. At the end of this path, your fingerprint blossoms into a short filmic vignette: a glimpse of the future you have co-authored, your own personalized fantasy world, in which abstract values and fleeting thoughts are rendered as landscapes you can almost touch.
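As a purely illustrative aside, the idea of a fingerprint that accumulates with each choice can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the class name `EthicalFingerprint`, the three dimension keys (taken from the words "fair, sustainable, or hopeful" above), the 0-to-1 scoring scale, and the use of a simple running average. The workshop's actual scoring method is not described in this text.

```python
from dataclasses import dataclass, field

# Dimension names borrowed from the prose; the 0.0-1.0 scale is an assumption.
DIMENSIONS = ("fairness", "sustainability", "hope")


@dataclass
class EthicalFingerprint:
    """Hypothetical profile: a running average of scores per dimension."""
    totals: dict = field(default_factory=lambda: {d: 0.0 for d in DIMENSIONS})
    choices: int = 0

    def record(self, scores: dict) -> None:
        # Add one choice's scores; dimensions missing from `scores` count as 0.0.
        for d in DIMENSIONS:
            self.totals[d] += scores.get(d, 0.0)
        self.choices += 1

    def profile(self) -> dict:
        # Average each dimension over the choices recorded so far.
        if self.choices == 0:
            return {d: 0.0 for d in DIMENSIONS}
        return {d: self.totals[d] / self.choices for d in DIMENSIONS}


fingerprint = EthicalFingerprint()
fingerprint.record({"fairness": 1.0, "sustainability": 0.5, "hope": 0.0})
fingerprint.record({"fairness": 0.0, "sustainability": 0.5, "hope": 1.0})
print(fingerprint.profile())
```

A running average keeps the sketch simple; a real system might weight later choices differently or track the Self, World, and Evolution axes separately.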

The journey does not end in solitude. As travelers return from their individual quests, they find others carrying different fingerprints. Some worlds harmonize while others clash. Here the story opens into a shared space: participants gather around a figurative “campfire” where worlds collide and intermingle. Guided by facilitators, they confront meta-dilemmas distilled from their collective data, weaving disparate threads into dialogue. Groups negotiate, debate, and ultimately begin sketching the outlines of a “meta-world”, a collaborative design that embodies both tension and synthesis, echoing traditions of collective intelligence (Larson, 2017; Dellermann et al., 2019).

Beneath its playful surface, the workshop is a live research platform. It collects empirical insights into how people reason morally across temporal identities and under conditions of uncertainty, building on work such as the Moral Machine experiment (Awad et al., 2018). It probes the potential of AI to serve as a reflective agent, facilitating rather than dictating ethical engagement (Coeckelbergh, 2015; Gunning et al., 2019). It also contributes to broader discussions of responsible AI (Dignum, 2019) by exploring co-creative processes where humans and machines jointly articulate values and futures.

The anticipated impact extends far beyond the conference setting. The methods and data can inform the design of educational curricula, serious games, and participatory policy processes that integrate ethical deliberation into everyday practice. They also suggest new paradigms for digital experiences that are not merely personalized but philosophically meaningful. Above all, The Experiential Journey demonstrates that ethical reflection in the age of AI need not be abstract, inaccessible, or dour. Instead, it can be playful, affective, and deeply human: an imaginative adventure in which participants and AI together chart the landscapes of tomorrow.

References

- Awad, E., Dsouza, S., Kim, R., Schulz, J., Henrich, J., Shariff, A., Bonnefon, J.-F., & Rahwan, I. (2018). The Moral Machine experiment. Nature, 563(7729), 59–64.

- Braidotti, R. (2019). Posthuman Knowledge. Polity Press.

- Coeckelbergh, M. (2015). Robot Ethics. MIT Press.

- Dellermann, D., Ebel, P., Söllner, M., & Leimeister, J. M. (2019). Design principles for human-AI collaboration. Proceedings of ECIS.

- Dignum, V. (2019). Responsible Artificial Intelligence. Springer.

- Floridi, L. (2019). The Logic of Information. Oxford University Press.

- Gunning, D., Stefik, M., Choi, J., Miller, T., Stumpf, S., & Yang, G.-Z. (2019). XAI: Explainable artificial intelligence. Science Robotics, 4(37), eaay7120.

- Guzdial, M., & Riedl, M. O. (2019). Explainable AI for games. Proceedings of AIIDE, 15(1), 162–168.

- Hershfield, H. E., et al. (2011). Increasing saving behavior through age-progressed renderings of the future self. Journal of Marketing Research, 48(SPL), S23–S37.

- Larson, K. (2017). Collective intelligence and the future of work. MIT Sloan Management Review, 58(3), 1–5.

- Parrott, A., de la Rosa, S., & Schienle, A. (2019). Virtual reality exposure and future self-continuity. Frontiers in Psychology, 10, 2307.

- Russell, S. (2019). Human Compatible. Viking.